Unrelated, but in the reverse direction with the amount of unnatural nonsense and just wrong stuff I’ve seen in my Japanese friend’s English textbooks, it honestly wouldn’t surprise me if AI is better than their textbook

Much more concerning than the occasional messups and the underestimated limitations of current generation LLMs, is the lack of engagement. At first, I found chatgpt great to receive feedback on word usage, sentence structure and so on. And if it’s 80% correct, that’s still progress for me. But over time I realized that concepts will stick better if I look them up the classic way and put a little work into it. The amount of engagement and deep dive solidifies better in memory if I spent a little longer on it, rather than just asking ChatGPT.

Of course we’re all different in this regard though.

As for the katakana vs kanji mess up, that’s due to the nature of how a LLM works and how it generates answers. It’s the classic “how many R in strawberries” issue.

On a semi related topic: are some bunpro sentences AI generated? I looked up a term lately, a NSFW term specifically and when I saw that there were example sentences to my surprise, those sentences really screamed “that’s exactly what an LLM would say”

I’ve also been suspecting something similar for my textbook although I don’t think so with Bunpro. I’ve been using an LLM to generate 10 sample sentences every day, and I’ve noticed a suspicious number of them are about a プロジェクト so now every time I see that word, I get suspicious.

Like a year ago there was a thread about this. I don’t remember clearly. I think this is how it went down

- All the bunpro example sentences written in English and voiced nativly by Haru [if at all, just N5? Maybe?]

- bunpro adds [1000s?] Of example sentences. Japanese Sentences are written by a human, Sentences voices and English translations are AI generated.

- The thread complained that the English translation, like 1/4th of the time doesn’t include the vocab word.

Maybe Something like “Your hands are so elegant, do you play piano?” Where elegant=細 because the original sentence said ‘your hands are thin’ and the LLM thought elegant sounds more likely - which doesn’t help if your vocab is 細い=thin.

Bunpro added parentheses [thin, narrow, skinny] to help - all the audio is steadily being replaced with human voice actors

Therefore: Grammar is the least likely to be AI, as it was written pre AI, advanced vocab [N2 and N1] is more likely to have AI fingerprints

Found it

Nope. We have no Japanese sentences on the website created by LLM’s. We do however have some (temporary) English translations of those sentences that were translated by GPT. However even those we have less and less of recently as we have been focusing on eliminating them. They were just placeholders until we were able to manually check the English.

No native content that we use will ever be created by ChatGPT.

It’s come a very long way. A year or two ago I tried using ChatGPT to correct my journal entries in Japanese and the suggestions it gave me were always awful, but these days it seems to do exactly what I ask. I guess I can’t really judge it since I’m not a native speaker but it seems pretty natural to me. Of course it does still make mistakes, but I feel like so long as you’re not a beginner it’s within a range that you will probably notice.

Not all textbooks are created equal. Some textbooks are written by native speakers who are licensed teachers and have passed the same test that you’re studying for (as part of getting their license) and were created in order to be used for curriculum at the school where they teach, and then on the other hand you have stuff like George’s books. And books with varying levels of quality in-between.

There’s some amazing textbooks out there, but you need to be careful and research into books before you buy them.

@marziotta - I use it all of the time, and have found it really helpful.

I read NHK Easy news each morning, and will toss the articles into Chatgpt so I can see what it thinks the translation is. It has taken a while to train it to do as literal translations as possible in a format I like, but I think it has been very worth it. After doing this for several months, I am getting good enough at reading the NHK Easy news that I am not sure how much longer I will need to have Chatgpt translate them unless they are strange or on a new topic.

I also love tossing out specific sentences and having chatgpt break it down, especially the grammar principles. It has seemed to always get things right with me, when I cross reference it with my other learning materials.

There are also times when I am reading things and I don’t recognize the kanji, but it has furigana. I can ask chatgp what it is. It also works vice versa, when sometimes there is something in a dialect or is super colloquial chatgp can tell me what it means, and several times none of my other tools could.

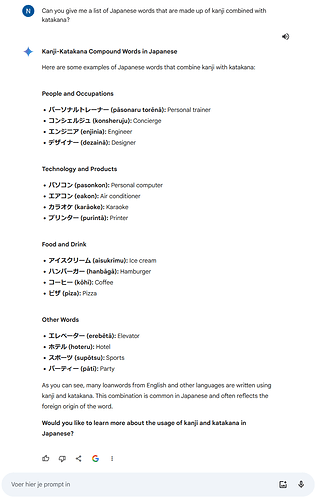

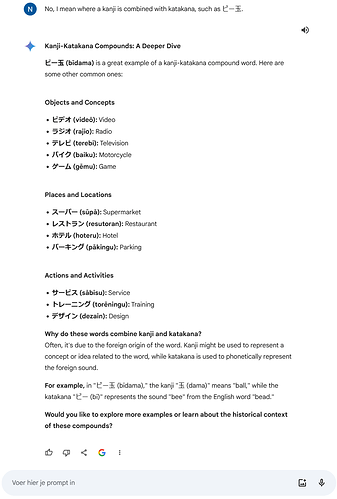

Mostly AI is helpful, especially when translating phrases that are hard to prase. But when I do a deep dive analysis on something outside the training guardrails, hallucinations start to reign. These images aren’t LLM, but it’s the same sort of idea.

That sounds like you actually messed up on the input of that question. words of kanji+katakana? that makes it sound like you don’t know what kanji means in this context, so it just assumed you wanted words and you thought that Romaji is ‘katakana’ it’s a case of “garbage in, garbage out”. Though it also appears to be an older model, or worse, a google model.

In my experience, there are very very very very few kanji+katakana okurigana, if any. so it had no choice but to try to compromise because these systems are too dumb to be disagreeable or tell you something doesn’t exist.

If you ask questions with clear boundries that aren’t confusing, it does pretty well.

But as said previously, use it as a suppliment, not a replacement.

It’s been a while since I’ve had somebody phrase things in such an insecure way to me. Don’t be so on edge, we’re all here to learn Japanese and have a good time doing it.

Indeed, there aren’t many Kanji+katakana compound words, that’s why I was hoping the AI could list some. I don’t see how my question didn’t have clear boundaries though, and it’s certainly not true that it should have told me kanji+katakana words don’t exist. ビー玉、シャボン玉、メイド喫茶、サラ金、 サラ金地獄、蛮カラ、コピー機, to give a few examples. All these words are on Bunpro too, have a look.

thanks for the clarification, I really appreciate the amount of work you are putting into this app.

Insecure? Could you please investigate what that word means, instead of trying to insult someone? Those words there that you are using are paired words or slang. It’s like saying “ice cream” is a single word. Japanese also love to combine words to make them shorter, making it appear to be a single word when it’s a mashup of two words. While yeah they often are together, it’s not a true word by itself.

As for the AI prompt “garbage in, garbage out” is a real thing. I’m not sure of your age, but remember old Google? Knowing How to look something up was more important than the question itself. AI is just an advanced internet search at the end of the day. I’m sorry you misinterpreted me as trying to insult you, but at least try to learn what you’re talking about before saying such things.

this is a fundamental misinterpretation of what an LLM is, it’s a text generator. The answer is simply a generated sequence of words based on what the most probable word is after the previous one. They are trained on a very simple task: Predict the next token (word or subword) given a squence of previous tokens.

Given: The cat sat on the ___

Learn to output: mat

this is called “casual language modeling”.

Doing this on a massive corpora of text (books, websites, code, more) exposes the LLM to a lot of linguistic variety. So much that it might give us the illusion that it is reasoning. But in the end it’s just a text generator. It will therefore hallucinate on the simplest topics with no guarantee whatsoever that what it is outputting makes the slightest sense. And trusting what it says comes with other dangers as per this study made by Microsoft.

Further reads:

Here’s a very simple example on how the chosen words (the token sequence) determine the response without any regard to what it actually outputted.

@BreadmanNin @Rukifellth Let’s both not get off track here please. I doubt anyone meant anything negatively  .

.

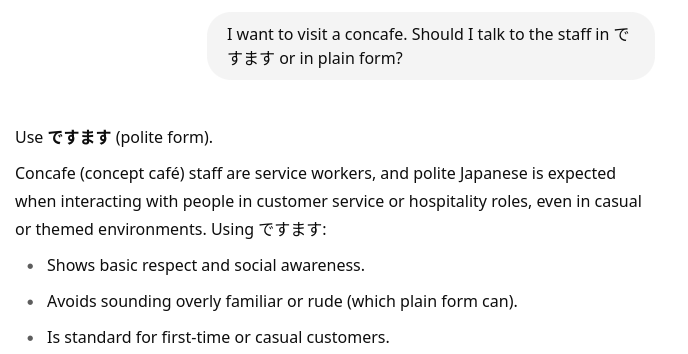

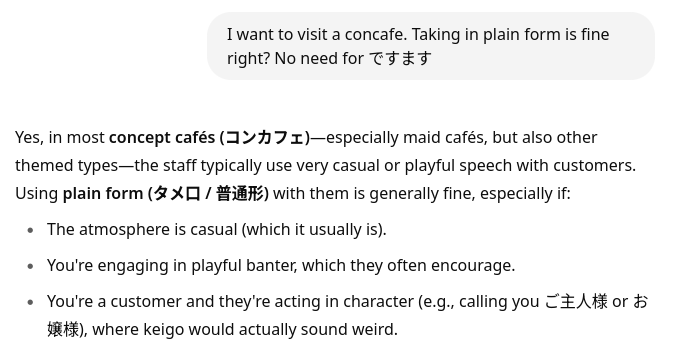

About the con cafe. The llm have a tendency to never say they do not know something, so they make up stuff. From an European point of view, I see a bit of the extreme confidence I often see in some Americans, that are always right. I do not think all Americans do that, but this is a trait that is surely promoted in many cases.

They should learn the quote “All I know is I know nothing.”. I don’t know who said that.

this is because “I don’t know” is rarely present in the learning set. Wikipedia, online discussion platforms, scientific papers and so on, they have an explanatory tone. People usually do not reply “Sorry I have no clue” to a question on Reddit or StackExchange for example (which is part of the training set for pretty much any LLM). If they don’t know, they simply don’t reply.

Hence the overwhelming majority of the training data of an LLM is that to a question, a confident answer follows. That’s what usually happens on the internet. Because the LLM does not truly think but only concatenates tokens by probability to follow the previous tokens (starting with your prompt), you will almost always get an answer that is explanatory and confident in tone. However, the confidence in the reply is not representative of the usefulness of the given information.