thanks for the clarification, I really appreciate the amount of work you are putting into this app.

Insecure? Could you please investigate what that word means, instead of trying to insult someone? Those words there that you are using are paired words or slang. It’s like saying “ice cream” is a single word. Japanese also love to combine words to make them shorter, making it appear to be a single word when it’s a mashup of two words. While yeah they often are together, it’s not a true word by itself.

As for the AI prompt, “garbage in, garbage out” is a real thing. I’m not sure of your age, but remember old Google? Knowing how to look something up was more important than the question itself. AI is just an advanced internet search at the end of the day. I’m sorry you misinterpreted me as trying to insult you, but at least try to learn what you’re talking about before saying such things.

This is a fundamental misinterpretation of what an LLM is. It’s a text generator. The answer is simply a sequence of tokens generated one at a time, each picked according to how probable it is given the tokens before it. LLMs are trained on a very simple task: predict the next token (a word or subword) given a sequence of previous tokens.

Given: The cat sat on the ___

Learn to output: mat

This is called “causal language modeling”.
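To make the fill-in-the-blank example above concrete, here is a minimal sketch of next-token prediction using simple word counts. It is a toy, not how a real LLM is built: a real model is a neural network that conditions on the whole preceding sequence, while this one only looks at the last word. The corpus and the function names `train_bigram` and `predict_next` are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for each word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, prompt):
    """Return the most frequent follower of the prompt's last word, or None."""
    last = prompt.lower().split()[-1]
    followers = counts.get(last)
    if not followers:
        return None  # word never seen with a follower in training
    return followers.most_common(1)[0][0]

# Invented toy corpus, only for illustration.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the mat",
    "the bird sat on the mat",
]
counts = train_bigram(corpus)
print(predict_next(counts, "The cat sat on the"))  # prints "mat"
```

The model outputs “mat” only because “mat” followed “the” most often in its training text. Nothing in it checks whether the answer is true.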

Doing this on massive corpora of text (books, websites, code, and more) exposes the LLM to a lot of linguistic variety. So much that it might give us the illusion that it is reasoning. But in the end it’s just a text generator. It can therefore hallucinate even on the simplest topics, with no guarantee whatsoever that what it is outputting makes the slightest sense. And trusting what it says comes with other dangers, as per this study made by Microsoft.

Further reads:

Here’s a very simple example on how the chosen words (the token sequence) determine the response without any regard to what it actually outputted.

@BreadmanNin @Rukifellth Let’s both not get off track here please. I doubt anyone meant anything negatively.

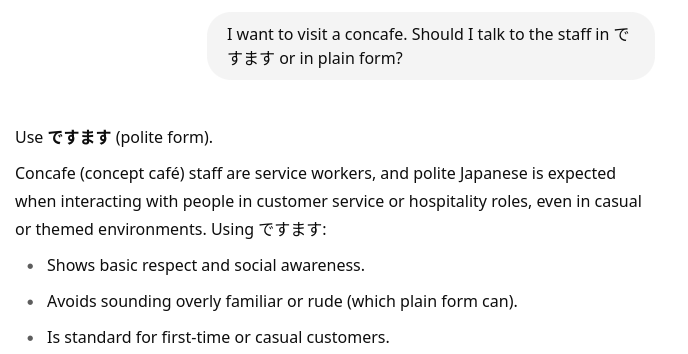

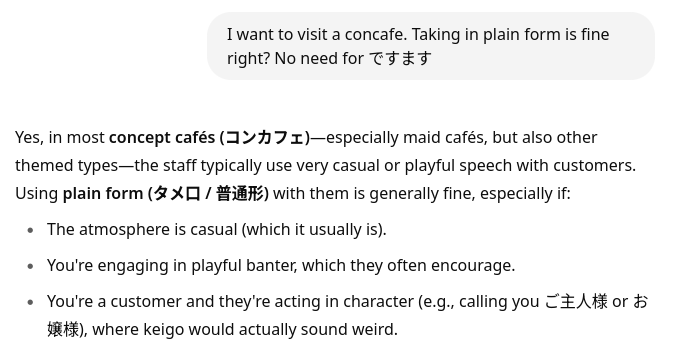

About the con cafe. LLMs have a tendency to never say they do not know something, so they make stuff up. From a European point of view, I see a bit of the extreme confidence I often see in some Americans, who are always right. I do not think all Americans do that, but this is a trait that is surely promoted in many cases.

They should learn the quote “All I know is I know nothing.”. I don’t know who said that.

This is because “I don’t know” is rarely present in the training set. Wikipedia, online discussion platforms, scientific papers and so on all have an explanatory tone. People usually do not reply “Sorry I have no clue” to a question on Reddit or StackExchange, for example (which are part of the training set for pretty much any LLM). If they don’t know, they simply don’t reply.

Hence, in the overwhelming majority of an LLM’s training data, a question is followed by a confident answer. That’s what usually happens on the internet. Because the LLM does not truly think but only appends tokens according to how probable they are after the previous tokens (starting with your prompt), you will almost always get an answer that is explanatory and confident in tone. However, the confidence in the reply is not representative of the usefulness of the given information.
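A rough sketch of that effect, with numbers that are entirely invented for illustration (they are not measurements from any real model or dataset): if “I don’t know” openings are rare in the training text, they get a tiny probability, so picking by probability almost never produces them. The names `reply_openings`, `greedy` and `sample` are hypothetical.

```python
import random

# Invented toy distribution over how a reply to a question might begin.
# These probabilities are assumptions for illustration, not real data.
reply_openings = {
    "The answer is": 0.55,
    "It works like this:": 0.30,
    "You should": 0.14,
    "I don't know": 0.01,
}

def greedy(dist):
    """Always pick the single most probable opening."""
    return max(dist, key=dist.get)

def sample(dist, rng, n):
    """Draw n openings at random, weighted by their probability."""
    openings = list(dist)
    weights = list(dist.values())
    return rng.choices(openings, weights=weights, k=n)

rng = random.Random(0)  # fixed seed so the run is repeatable
draws = sample(reply_openings, rng, 1000)

print(greedy(reply_openings))           # prints "The answer is"
print(draws.count("I don't know"))      # a small number out of 1000
```

Note that the confident opening wins regardless of whether the content that follows is correct. The tone comes from the statistics of the text, not from any check of the facts.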

Exactly this! I’m a 3D artist and the first thing I had to learn was, ALWAYS use reference images. That is literally an industry standard workflow. The reference either comes from photos other people have taken, from paintings other people have painted, or from 3D art other people have sculpted/modeled.

People who create movies, music and other forms of media didn’t create those things out of thin air. They were all influenced by things that they’ve heard and watched in their lives.

At least you are able to tell an explanation was bad, or you’ve read reviews saying that textbook X provides better explanations than textbook Y. With AI, you are alone talking to a bot. If you want to confirm something, you fall back to a textbook or google.

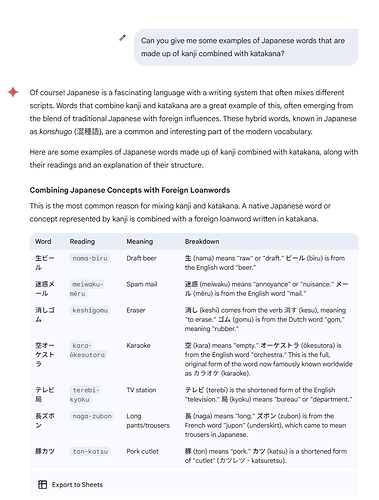

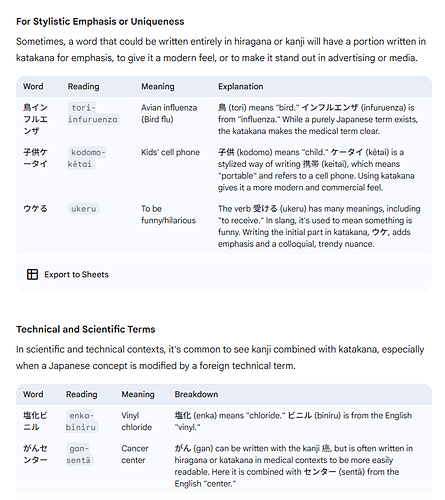

I find Claude pretty invaluable for asking questions like “Can you unpack the grammar in this Japanese sentence? [sentence]. I don’t understand the role that [word] is playing.” It usually gives an excellent explanation in response to that kind of thing.

Don’t get into a long conversation with it though, just start a new chat, ask the question, get the answer, and move on. The longer the conversation gets, the more likely it is to start making up random stuff, in my experience.

Also, for complicated or obscure questions (especially about Japanese culture), it helps if you say “answer first in Japanese and then in English.” The Japanese explanation will usually be better than the one it would give in English because it’s predicting what a Japanese speaker would say instead of predicting what an English speaker would say. So if it writes it in Japanese first and then translates it can give higher quality answers.

I’m very torn on AI use. I do sometimes use it both for language learning and my programming job, but in both cases I find that you should absolutely never blindly trust it. So for instance I find that generative AI can be pretty ok to generate code boilerplate if I already know what I expect and I can easily check the output for correctness.

For learning a language however that’s trickier, because by definition you probably don’t have the skill level required to vet the answers and identify incorrect or inaccurate statements. I still use it from time to time when I really struggle to parse a sentence in something I read, it usually does a good job at pure translation and a somewhat worse job at explaining the grammar.

But I would absolutely never trust it with a prompt like “can you explain Japanese い-adjective conjugations to me” or something similarly generic. I know some people use AI like they would a textbook and that seems like a terrible idea to me. Even generating text in Japanese for reading practice for instance seems so odd to me, you have access to a virtually infinite amount of Japanese for basically any level online, why risk having AI generate something broken?

That happens with any language, even English. LLMs are just statistical models; they regurgitate whatever was fed to them. They overuse words (see “Why Does ChatGPT ‘Delve’ So Much?”: FSU researchers begin to uncover why ChatGPT overuses certain words, Florida State University News), they don’t know if the output is correct or not (they have no concept of “knowing”, even), and they’re programmed to say whatever we want them to say, which is not to say that they’re programmed to say the right things.

With some models, even if the model gives you the right explanation, if you wrongly correct it or pose a question in a certain way, it will “say” that it was wrong and wrongly correct itself, just because that’s what you were looking for.

Yeah as has been pointed out in this thread already, a huge issue with these language models in my experience is their inability to answer “I don’t know” or even ask follow-up questions when they lack information. Instead they just write out a plausible-sounding but sometimes completely wrong answer.

And the more niche the topic the worse it gets. That’s why it looks really impressive when you just ask simple questions as the model will usually have ample enough data to reply something coherent. But as soon as you start getting into trickier topics the results can get really poor really quickly.

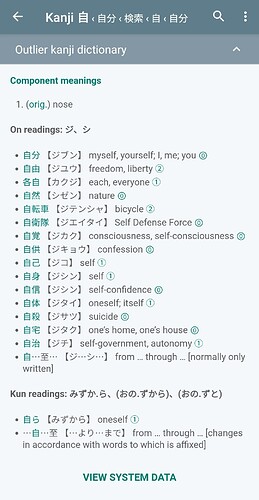

I usually just ask it what the nuances are between two words. Like “How is 自分 different from 私 or 僕?”

The answer it gave sounded pretty legit to me.

「TL;DR:

Use 私 when being polite and neutral

Use 僕 if you’re male and in a friendly or soft setting

Use 自分 when you’re emphasizing responsibility, identity, or doing something yourself」

There was obviously more to this, but it all made understanding 自分 easier. It even added in about 俺 when explaining 僕.

At the end of the day, I follow-up with many resources. I like Bunpro, but I also find it to be aggravating at times. Without having an actual 日本人 spending time teaching me Japanese, from an area I might go to, every resource has its pros and cons.

If that’s what it said, that’s actually a pretty terrible explanation for 自分 IMO. The key for 自分 is that it’s not a first person pronoun, it’s a reflexive pronoun, so it can be applied to third persons, unlike 私、僕、俺、etc… The closest equivalent in English would be “oneself”. There’s more to it, but if I was to give a quick explanation that’s certainly where I would start.

I’ll repeat what I said above and assert that for these very simple questions you’re almost always better off just searching for articles online before rolling dice with AI, for instance:

Amusingly while looking for other articles I found this one which seems to be mostly or entirely AI-generated and of extremely low quality as a result:

While I didn’t ask it to define 自分, if that’s what you’re implying, it did say those things too. Overall, I think it deepened my understanding, and clearly showed me the differences. I actually wasn’t trying to really figure out 自分. I was trying to figure out:

I saw the “I, me, you” at the tail end of the first word, and wondered what made 自分 different.

I admit, I could have put a little more emphasis on the fact that that was only a snippet of what it said, but if you were trying to convince me that it was worthless, all you did was confirm that I should use multiple resources.

Thank you for the Tofugu link, that is something I don’t utilize very often.

Just a few years ago we only had to worry about bad search results from content farms, now it’s also LLM content farms…

I wonder if there is/will be an equivalent of uBlock for search engines: a shared list of domains I can just forever remove from my search results.

There is, and it works fairly well: uBlacklist with shared AI content lists.

I mainly use it to exclude AI-generated images from my Google Images results,

but I also use it to remove stuff like Quora or Pinterest in general from all results.
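For anyone curious what a shared domain blocklist boils down to, here is a minimal sketch of the idea. It is not uBlacklist’s actual implementation or rule format, just an illustration of dropping results by domain; `BLOCKED_DOMAINS`, `is_blocked` and `filter_results` are hypothetical names, and the URLs are made-up examples.

```python
from urllib.parse import urlparse

# Hypothetical blocklist; a shared list would simply be a longer set.
BLOCKED_DOMAINS = {"quora.com", "pinterest.com"}

def is_blocked(url, blocked=BLOCKED_DOMAINS):
    """True if the URL's host is a blocked domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in blocked)

def filter_results(urls, blocked=BLOCKED_DOMAINS):
    """Keep only the URLs whose host is not on the blocklist."""
    return [u for u in urls if not is_blocked(u, blocked)]

# Made-up example search results.
results = [
    "https://www.quora.com/some-question",
    "https://example.org/article",
    "https://pinterest.com/pin/123",
    "https://notquora.com/page",
]
print(filter_results(results))
# prints ['https://example.org/article', 'https://notquora.com/page']
```

Matching on the host rather than on the raw URL string keeps lookalike names such as `notquora.com` from being removed by accident.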