Very interesting concept, I can only hope the various issues (like increasing strength for words that weren’t used or such) will be ironed out, because this can no doubt be a very nice tool to try and strengthen my output. Will definitely be testing it and reporting issues I find!

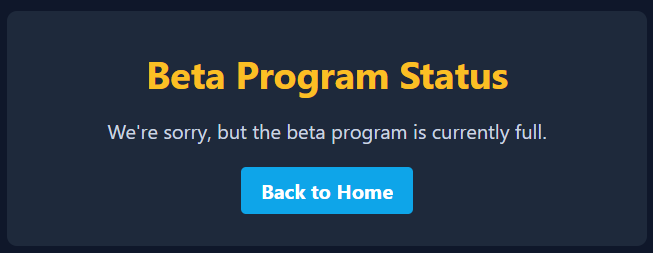

I think so, or at least I couldn’t sign up for beta testing:

one small suggestion! when we reach our daily goal, it would be nice if we got some sort of notification. i ended up doing a bunch of extra sentences, which isn’t a bad thing, but if i just want to hit my goal specifically, i can end up on the website for longer than anticipated!

Yeah, sorry to everyone signing up now - for now I do not want to have more than 50 beta users, especially while I’m ironing out initial issues. I’ll try to see in the next few days if I can open up to a few more people and report here.

That’s a great idea! I’ll try to add in general some indication of where you are in your work towards your daily goal in the practice session UI (currently it’s a bit annoying that you can only see it when you go back to the dashboard). I’ll try to fix it this weekend.

Good job, but a couple of notes:

- I would argue that translation is not output (or reading/listening comprehension). Translation is a whole different skill.

Not all bilingual people make good translators because it’s a learnt skill. So I’d say this is an app to practice translation to Japanese. It’s probably useful to those who want to practice that specific skill.

When I speak a foreign language I don’t think first in my native language and then translate it to the target language, and vice versa if I’m listening or reading.

- And I would strongly advise against using LLMs to produce and/or translate sentences, especially if they are not reviewed by humans. Please refer to the discussion on AI here for more insights. LLMs don’t know what they’re telling you, LLMs don’t have the concept of “Japanese”, “English” or what’s correct or not, and they will “lie” to you. They are just statistical models.

Thank you for the notes.

- I completely agree. I have some ideas for how to improve this (e.g., giving the user more open-ended prompts like “tell me about your day” or “you are at a store trying to buy X, what would you say to the shop attendant”). However, I ended up going with translations for now because that way it’s a bit easier for me to control what vocabulary you’ll have to use (I wanted the app to force me to use vocabulary that I’m less familiar with). I’m aware that it’s not a perfect solution (and it becomes even more apparent at high levels like N2-N1, where I’m finding it hard to translate some of these sentences even into my native language).

- Thank you for pointing me to that interesting discussion! I agree that users should be wary of AI answers in general, and I try to give proper warnings on the website (maybe I should add more). As a quick way to put in some extra practice I found this helpful (for myself), and in most cases I found that it does give me helpful advice when I’m making mistakes.

Thank you for the app !

One minor request : would it be possible to have a counter of how many sentences you’ve done ? Right now, you can choose a goal, but the app lets you practice up to 20 sentences a day, no matter what you choose. That’s fine, but for pacing purpose, just showing how many sentences you’ve done so far would be nice.

Really hoping that the idea of this app goes further. I find it a bit odd that some people always advise learning “how to think in Japanese first”, when translating a sentence from English to Japanese can be just as valid a way to evaluate comprehension. Looking forward to new openings in the beta testing!

Thank you for the suggestion! There’s a progress bar on the main page that shows how many sentences you’ve done, but I just added it to the practice session as well (during feedback). This was also suggested by @koyakoyakoya

Thank you for adding the progress bar to the review screen !

A question : when testing for a level, it seems like only the vocabulary for this level is taken into account (for example, only N3 vocabulary if you’re practicing for N3). What happens for N5/N4 words you might be using ? In the future, could there be a way to test multiple levels (e.g. : set the goal as 5 N5 level sentences + 5 N4 level sentences + 10 N3 level sentences ? Basically like Bunpro Decks, where you can set a different goal for multiple decks and study them at the same time ?)

And once again, thank you for the app ! Even though it can be argued that translation and production are two different things, there are simply NO translation apps to be found anywhere. Clozes (and Duolingo-style sentence rearranging) are not the same. I do realize that the grammar-translation method fell out of favor many years ago, but immersing (that is, reading/hearing but on the whole passive understanding) doesn’t magically make you able to produce Japanese, and not everyone has access to regular speaking partners, either because of location or work/life schedule… Your app fills a huge gap (at least one I’ve been looking to fill for a long time…)

That’s fair, and personally I’m not learning from an LLM, but this is the whole premise of the application. So this advice is basically not far from “stop developing this app”

How would you advise approaching it instead? A fixed list of sentences and a couple of permutations of translations, akin to Duolingo or Bunpro but with free-form input? That’s a mountain of expensive human work for a fraction of the coverage.

If the premise of the application is providing potentially wrong sentences to its users, I personally don’t think it should exist. That’s not to say an app can’t have any errors (there’s a bug report button in bunpro for a reason), but it’s not by design and it’s much more likely to be wrong if a computer hallucinates.

That said, if the idea is to have LLM producing sentences and their translations, here’s what I would do:

- LLM “creates” a large bank of sentences and their translations

- This sentence-translation bank is reviewed and perhaps annotated by humans (especially considering the same sentence can be translated in multiple ways, with different nuances)

- Users try to translate sentences during normal use of the app

- The app does not attempt to correct the sentence, but provides the translation it generated, reviewed and annotated by humans

- The user marks their own solution as correct / needs improvement / incorrect

I believe this would greatly mitigate the dangers of having incorrect computer-generated content.
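To make the steps above concrete, here is a minimal sketch of what such a bank could look like. Everything in it (the `BankEntry`, `SelfGrade`, `servable` and `reveal` names, and the example sentences) is made up for illustration and is not how Sakugo works today. The point is only that unreviewed entries are never served, and that the app reveals a reference instead of correcting the user.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class SelfGrade(Enum):
    """The three self-assessment options the user picks from."""
    CORRECT = "correct"
    NEEDS_IMPROVEMENT = "needs improvement"
    INCORRECT = "incorrect"


@dataclass
class BankEntry:
    english: str                 # prompt shown to the user
    japanese: str                # machine-generated reference translation
    reviewed: bool = False       # flipped to True only by a human reviewer
    notes: list[str] = field(default_factory=list)  # reviewer annotations


@dataclass
class Attempt:
    entry: BankEntry
    user_answer: str
    grade: SelfGrade             # chosen by the user, not by the app


def servable(bank: list[BankEntry]) -> list[BankEntry]:
    """Return only the entries a human has reviewed."""
    return [e for e in bank if e.reviewed]


def reveal(entry: BankEntry) -> str:
    """Show the reviewed reference and its notes; no automatic correction."""
    lines = [f"Reference: {entry.japanese}"]
    lines += [f"Note: {n}" for n in entry.notes]
    return "\n".join(lines)


# Hypothetical example data.
bank = [
    BankEntry("I eat sushi.", "私は寿司を食べます。", reviewed=True,
              notes=["Dropping 私は is also natural."]),
    BankEntry("It is raining.", "雨が降っています。"),  # unreviewed, never served
]

entry = servable(bank)[0]
print(reveal(entry))
attempt = Attempt(entry, "寿司を食べます。", SelfGrade.CORRECT)
```

The trade-off is the one raised earlier in the thread: the human review step is what costs time and money, so coverage grows only as fast as reviewers can work.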

This is a great concept and project, very helpful, and a great way to put studies into practice. I’m glad I was able to create an account during beta testing.

I wouldn’t pay any attention to anyone who doesn’t complement their studies with several tools and resources, with anything that adds to their progress and motivation. That being said, your initiative is absolutely awesome and definitely worth using. Thank you

That’s fair. I’ll try to formulate my own thinking about it though I’m also struggling with understanding how I feel about all this. Let’s say you are currently learning Japanese. Your roommate and best friend is a pretty good Japanese speaker as a second language, but not a native speaker (let’s say their Japanese is about the same level as my English). They definitely make mistakes and you can’t fully trust their advice, but they are available to you almost all the time. You also have much more reliable sources (e.g., a Japanese teacher on italki, or your Japanese coworker) but your access to them is much more limited (e.g. you have a lesson with them once a week, or you only see them at work and feel much less comfortable asking them for advice about the language).

Would you avoid speaking Japanese with your roommate because they might give you the wrong advice? Or will you prefer to speak Japanese with them during the day to practice, since they are fully available and know Japanese pretty well, but know that you shouldn’t rely on them exclusively?

I think for me my approach to using LLM with my language studies is like getting advice from this friend/roommate. I know I can’t fully trust them, but I can still get some practice and useful information from them as long as I take it into account.

The difference is that your roommate can tell you when they don’t know something, and you can see them struggling to come up with sentences, so you know when they’re not completely sure.

LLMs hallucinate as easily as they provide correct answers, and they don’t know the difference (LLMs don’t have the concept of “knowing”). Even worse, they are often confidently wrong and you will not find out unless you look it up or you already know the answer. Even if they are correct, they can be “convinced” that they are wrong and change their answer.

They are just statistical models. Duolingo was mentioned as an example earlier ITT. Well, many duolingo users are not satisfied exactly because of the issues I’m listing:

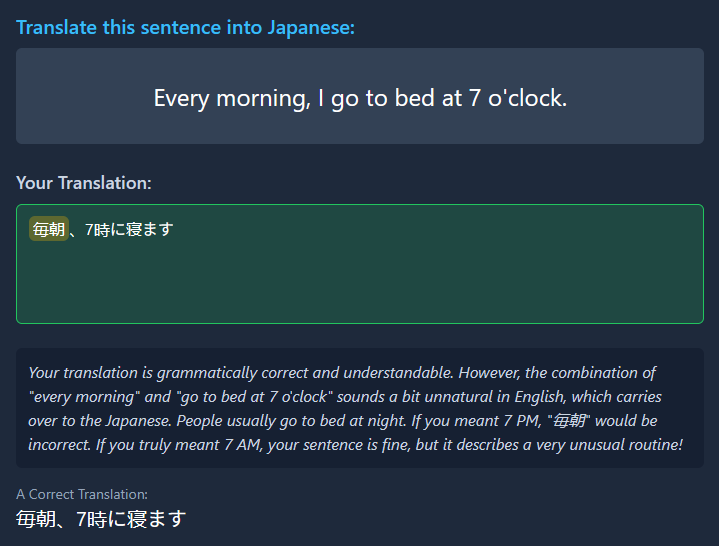

So this app has taught me my grasp on particles when writing actual full-on sentences isn’t quite as strong as I had previously believed, but that’s okay!

However, a nice improvement would be if the correction blurb thingy included some contrasting examples and explained what the actual difference in nuance becomes as a result of your incorrect/unnatural choices.

Of course, maybe my grasp on particles is already perfect and the LLM is just hallucinating incorrect or unnecessary edits in an effort to dilute my actual knowledge and make my Japanese worse. If media have taught me anything, it’s that the machine is always out to get me (I’m mostly joking…mostly…)

Thank you everyone who has given feedback and reported bugs here and through messages. It’s been really helpful!

I’ve made a few fixes over the last few days based on reports from users:

- Many tweaks to the AI tutor instructions to improve the translation feedback.

- Fixing bugs: mainly time-zone related issues, and problems with the sign-ups and onboarding.

- Some tweaks to the UI including adding the goal-progress meter to the practice page.

There are still many things I want to fix or add, but I’ll be traveling abroad for a few weeks, so Sakugo development will be on the back burner until I’m back in early August (I’ll still fix critical bugs during that time).

I just opened 10 additional beta spots in case anyone else wants to try it out. If you’re already signed up, you can click the “Click here to see if spots have opened in the beta program” message on the homepage to join (or go to https://sakugo.app/join-beta). I’m really sorry to everyone still waiting — since the backend LLM calls cost me money, I can’t open it to everyone just yet.

That’s hilarious

I like how it commented on how the English sentence is unusual without realizing it came up with that sentence itself…

(It makes sense, because currently these are two different instances of the AI tutor: one that builds the sentence and one that gives the feedback. There’s no connection between them, so the second one doesn’t know that it was an AI that produced the original sentence. Perhaps I should tell it this in the system prompt…)
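A rough sketch of what telling the feedback instance could look like, assuming the common role/content chat-message format. The function name and the prompt wording are hypothetical, and no particular LLM API is called here; it only builds the messages that would be sent.

```python
def build_feedback_messages(english_prompt: str, user_translation: str) -> list[dict]:
    """Build the message list for the feedback instance (hypothetical helper)."""
    system = (
        "You are a Japanese tutor giving feedback on a learner's translation. "
        # The key addition: say where the English sentence came from.
        "The English sentence was generated by another AI model, "
        "not written by the learner. "
        "If the English is unusual, do not attribute that to the learner; "
        "focus your feedback on the Japanese."
    )
    user = (
        f"English sentence: {english_prompt}\n"
        f"Learner's Japanese: {user_translation}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


messages = build_feedback_messages("I eat sushi.", "寿司を食べます。")
```

Whether this actually stops the tutor from commenting on its own sentences would need testing; it only gives the second instance the context it is currently missing.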