This is just as accurate as Mokuro, if you have any experience with it (because it literally just uses the mokuro output). In my experience it's pretty good, even with challenging source material. Of course there are mistakes, but I definitely wouldn't say it's “nearly useless”; you're welcome to try it out on your own source material. And of course, the higher the resolution, the better the result will be.

Thanks, I just tried giving it a go! I installed Python 3.12 as recommended, but something went wrong running the tool: I got a couple of errors (below). Does this look like something on my end? To keep it simple, I dumped all of my manga JPG files into one folder, ran it, and got an empty CSV file as output:

Code

```text
C:\Users\zan>jpvocab-extractor --type manga C:\Users\~~\ALL_FILES
2025-01-07 02:17:44,722 | INFO | root - Extracting texts from C:\Users\~~\ALL_FILES...
2025-01-07 02:17:44,722 | INFO | root - Running mokuro with command: ['mokuro', '--disable_confirmation=true', 'C:/~~/ALL_FILES']
2025-01-07 02:17:44,722 | INFO | root - This may take a while...
Traceback (most recent call last):
  File "<frozen runpy>", line 198, in _run_module_as_main
  File "<frozen runpy>", line 88, in _run_code
  File "C:\Users\zan\AppData\Local\Programs\Python\Python312\Scripts\mokuro.exe\__main__.py", line 4, in <module>
  File "C:\Users\zan\AppData\Local\Programs\Python\Python312\Lib\site-packages\mokuro\__init__.py", line 3, in <module>
    from mokuro.manga_page_ocr import MangaPageOcr as MangaPageOcr
  File "C:\Users\zan\AppData\Local\Programs\Python\Python312\Lib\site-packages\mokuro\manga_page_ocr.py", line 7, in <module>
    from comic_text_detector.inference import TextDetector
  File "C:\Users\zan\AppData\Local\Programs\Python\Python312\Lib\site-packages\comic_text_detector\inference.py", line 14, in <module>
    from comic_text_detector.utils.io_utils import imread, imwrite, find_all_imgs, NumpyEncoder
  File "C:\Users\zan\AppData\Local\Programs\Python\Python312\Lib\site-packages\comic_text_detector\utils\io_utils.py", line 11, in <module>
    NP_BOOL_TYPES = (np.bool_, np.bool8)
                               ^^^^^^^^
  File "C:\Users\zan\AppData\Local\Programs\Python\Python312\Lib\site-packages\numpy\__init__.py", line 427, in __getattr__
    raise AttributeError("module {!r} has no attribute "
AttributeError: module 'numpy' has no attribute 'bool8'. Did you mean: 'bool'?
2025-01-07 02:17:55,287 | ERROR | root - Mokuro failed to run.
2025-01-07 02:17:55,287 | INFO | root - Getting vocabulary items from all...
2025-01-07 02:17:55,287 | INFO | root - Vocabulary from all: , ...
2025-01-07 02:17:55,287 | INFO | root - Processing CSV(s) using dictionary (this might take a few minutes, do not worry if it looks stuck)...
100%|████████████████████████████████████████████████████████████████████████████████████| 1/1 [00:00<00:00, 79.32it/s]
2025-01-07 02:17:55,318 | INFO | root - Vocabulary saved into: vocab_all in folder C:/~~/ALL_FILES
```

Could you run “pip list” and check the version of numpy you have installed? Thank you!

EDIT: If you notice it’s v2.x or above, try this command:

pip install numpy==1.26.4
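If you'd rather check from Python than scan the `pip list` output, here is a minimal sketch. It relies only on what the traceback shows plus one fact about NumPy: `np.bool8` was deprecated in NumPy 1.24 and removed in 2.0, so any 2.x install will trip comic_text_detector. The helper name `needs_numpy_pin` is hypothetical and not part of either tool.

```python
from importlib.metadata import PackageNotFoundError, version


def needs_numpy_pin(numpy_version: str) -> bool:
    """Return True for NumPy 2.0 or later, where np.bool8 no longer exists."""
    major = int(numpy_version.split(".")[0])
    return major >= 2


def main() -> None:
    try:
        installed = version("numpy")  # reads package metadata without importing numpy
    except PackageNotFoundError:
        print("numpy is not installed")
        return
    if needs_numpy_pin(installed):
        print(f"numpy {installed} found; try: pip install numpy==1.26.4")
    else:
        print(f"numpy {installed} should still provide np.bool8")


if __name__ == "__main__":
    main()
```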

That fixed the error, thank you!! It's processing (not done yet), but since it looks like it's going to take quite a while to finish the whole thing, I figured I would give an update now!

Thanks a lot! I'll add a note with the fix to the page. The error appears to be caused by an update to some of the packages I'm using (newer NumPy releases no longer have `np.bool8`, which comic_text_detector still references), so sadly there's nothing I can currently do to fix it directly.

And yeah, processing the manga with mokuro can take a long time. If you have a compatible GPU, you might be able to install PyTorch so that mokuro can make use of it (see “Start Locally | PyTorch”). I can't really provide much help with this, though; as far as I know, mokuro should just use it automatically once it's installed and working correctly.
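To confirm that PyTorch can actually see the GPU before starting a long mokuro run, a quick check like this may help. It's a minimal sketch using `torch.cuda.is_available()` and `torch.cuda.get_device_name()`; it only covers NVIDIA/CUDA GPUs, and whether mokuro then picks the GPU up on its own is the assumption stated in the post, not something this script verifies.

```python
def describe_torch_device() -> str:
    """Report whether PyTorch is importable and whether it can see a CUDA GPU."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"
    if torch.cuda.is_available():
        # Name of the first visible CUDA device.
        return f"CUDA GPU available: {torch.cuda.get_device_name(0)}"
    return "PyTorch is installed, but no CUDA GPU is visible (CPU-only build or driver issue)"


if __name__ == "__main__":
    print(describe_torch_device())
```

If this reports no GPU on a machine that has one, the usual cause is a CPU-only PyTorch wheel; the install command from the “Start Locally” page lets you pick a CUDA build.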

Interesting, I'll look into it after this batch. Thanks!

All good - I’d rather that the tool take a long time if it helps with accuracy rate.

I was messing around comparing JPDB and this tool.

Sentence: こんな料理上手なお母さんを持って幸せなんだから分かってるのか?

JPDB: こんな, 料理上手, お母さん, を, 持つ, 幸せ, なんだ, から, 分かる, のか

japanese-vocabulary-extractor: こんな, 料理, 上手, だ, 御, 母, さん, を, 持つ, て, 幸せ, の, から, 分かる, てる, か

Which do you guys think is better?

JPDB seems to focus on forming longer words, while this tool breaks text into smaller components.

I can't tell which is better. Both seem OK in their own way.

After some thought, I think what I would really like is:

- “お母さん” from JPDB instead of “御, 母, さん”

- “料理, 上手” from japanese-vocabulary-extractor instead of “料理上手”

“お母さん”, “料理”, “上手” are marked as “common” in Bunpro, so there is likely some flag in JMDict or something.

Maybe split by longest “common” words? Not sure if the underlying parser can handle this.
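The “split by longest common word” idea can be sketched as a greedy longest-match segmenter. This is only an illustration: `COMMON_WORDS` is a tiny hand-made stand-in for a real list of common words (JMdict does mark such entries with priority tags like `ichi1` or `news1`, which is plausibly where Bunpro's “common” label comes from), and 料理上手 is deliberately left out of it so it splits into 料理 and 上手. The sketch does no deinflection, so conjugated forms such as 持って would not match a dictionary form like 持つ.

```python
# Toy stand-in for a list of words flagged "common" (hypothetical data).
COMMON_WORDS = {"こんな", "料理", "上手", "お母さん", "母", "幸せ"}


def segment_longest_common(text: str, words: set[str]) -> list[str]:
    """Greedily split text, taking the longest known word at each position.

    Characters that do not start any known word are emitted one at a time.
    """
    max_len = max((len(w) for w in words), default=1)
    tokens: list[str] = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until one matches.
        for length in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i : i + length]
            if candidate in words:
                tokens.append(candidate)
                i += length
                break
        else:
            # No known word starts here: emit a single character.
            tokens.append(text[i])
            i += 1
    return tokens


print(segment_longest_common("こんな料理上手なお母さん", COMMON_WORDS))
# ['こんな', '料理', '上手', 'な', 'お母さん']
```

Greedy longest-match is simple but can pick a long word that blocks a better overall split; a real tokenizer would score whole-sentence paths instead.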

Common flag:

Hey! Really appreciate the feedback. Sadly, the parsing is done entirely by a library I have no involvement in, and it's way above my skill level… It would be possible to replace the parser I currently use with another one; you'd only need to change the “tokenizer.py” file. However, this would require a fair bit of research comparing different parsers to make sure they really work better and do what you want them to. Sadly, I don't think checking against Bunpro data is really feasible, as this would slow the program down by orders of magnitude… I haven't had much time to work on this lately, but if you find out more about this, feel free to let me know, and if I ever get back to it, I'll keep it in mind!

So, I’ve done more research and realized the following.

- japanese-vocabulary-extractor splits text into morphemes.

- For learning vocab, we probably want bunsetsu (文節: roughly a content word together with any particles or auxiliaries attached to it).

Good explanation at:

I found a Python library that can do this: megagonlabs/ginza on GitHub (GiNZA, a Japanese NLP library built on spaCy and based on Universal Dependencies).

Code:

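```python
import spacy
import ginza

# Load GiNZA's ELECTRA-based Japanese model (pip package: ja-ginza-electra).
nlp = spacy.load("ja_ginza_electra")
doc = nlp("こんな料理上手なお母さんを持って幸せなんだから分かってるのか?")

for sent in doc.sents:
    # bunsetu_phrase_spans yields the head phrase of each bunsetsu,
    # which drops the attached particles.
    for bunsetsu in ginza.bunsetu_phrase_spans(sent):
        # print(bunsetsu)
        print(bunsetsu.lemma_)
```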

Output:

```text
こんな
料理上手
お母さん
持つ
幸せ
分かる
```

Analysis of results

- Results seem pretty good.

- If you are learning vocab, there is no need to learn things like を, て, か, から. These are basically grammar.

- 料理上手 is still kept as a single unit, but I guess that's too bad; it seems to be a common “word”.
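Dropping the grammar items can be done by part of speech. GiNZA tags tokens with Universal Dependencies POS labels (exposed in spaCy as `token.pos_`, with the lemma in `token.lemma_`), so a minimal sketch is to skip tags such as ADP (e.g. を), AUX, PART, and SCONJ (e.g. て, から). The exact tag set in `GRAMMAR_POS` is an assumption worth tuning, and the sample tags below are hand-written for illustration, not real GiNZA output.

```python
# Universal Dependencies POS tags treated as "grammar" (an assumption to tune).
GRAMMAR_POS = {"ADP", "AUX", "PART", "SCONJ", "CCONJ", "PUNCT", "SYM"}


def vocab_lemmas(tokens: list[tuple[str, str]]) -> list[str]:
    """Keep content-word lemmas in order of first appearance, without duplicates.

    `tokens` is a list of (lemma, pos) pairs; with spaCy/GiNZA these would be
    (token.lemma_, token.pos_) for each token in a Doc.
    """
    seen: set[str] = set()
    result: list[str] = []
    for lemma, pos in tokens:
        if pos in GRAMMAR_POS or lemma in seen:
            continue
        seen.add(lemma)
        result.append(lemma)
    return result


# Hand-written sample tags, not actual GiNZA output.
sample = [("お母さん", "NOUN"), ("を", "ADP"), ("持つ", "VERB"), ("て", "SCONJ"),
          ("幸せ", "ADJ"), ("だ", "AUX"), ("から", "SCONJ")]
print(vocab_lemmas(sample))
# ['お母さん', '持つ', '幸せ']
```

With a loaded pipeline this would be called as `vocab_lemmas([(t.lemma_, t.pos_) for t in nlp(text)])`.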

Problems

- Haven't figured out how to convert these to JMdict IDs.

- Library’s dependencies are a bit of a mess, so you might not want it in your application.

- No prebuilt wheels for many Python versions, so on some versions dependencies have to be built from Rust source, etc.

- Works for me on Python 3.11, with Visual C++ Redistributable on Windows.

After more testing, GiNZA works well on that first sentence but poorly on others.

Sentence: 私立聖祥大附属小学校に通う小学3年生!

Output:

私立聖祥大附属小学校

通う

小学3年生

Anyway, I realized that I've been fiddling with this too much. I could have spent that time studying instead of working on tooling.

I’m just going to pick one and stick with it and call it a day.

I know I said that I was going to stop fiddling, but I believe I found the best way.

Just use an LLM to do this.

For example, Google Gemini 2.5 Pro Preview 03-25 is free and performs pretty well.

System Prompt:

```text
You are an AI agent whose task is to help English-speaking students learn Japanese.
Given a piece of Japanese text, break it down into a list of vocabulary words for the students to learn.
Do not return particles or proper nouns.
Ensure that the results are in the order they appear in the text.
Remove duplicates.
Return all words; do not attempt to remove any.

# Example Input
私立聖祥大附属小学校に通う小学3年生!

# Example Output
{
  "result": [
    {"Kana": "しりつ", "Kanji": "私立"},
    {"Kana": "ふぞく", "Kanji": "附属"},
    {"Kana": "しょうがっこう", "Kanji": "小学校"},
    {"Kana": "かよう", "Kanji": "通う"},
    {"Kana": "しょうがく", "Kanji": "小学"},
    {"Kana": "ねんせい", "Kanji": "年生"}
  ]
}
```

The input is just the subtitle text of an anime.

Output:

```json
{
  "result": [
    {"Kana": "ひろい", "Kanji": "広い"},
    {"Kana": "そら", "Kanji": "空"},
    {"Kana": "した", "Kanji": "下"},
    {"Kana": "いくせん", "Kanji": "幾千"},
    {"Kana": "いくまん", "Kanji": "幾万"},
    {"Kana": "ひとたち", "Kanji": "人達"},
    {"Kana": "いろんな", "Kanji": "色んな"},
    {"Kana": "ひと", "Kanji": "人"},
    {"Kana": "ねがい", "Kanji": "願い"},
    ...
```

All that remains is to figure out the JMDict IDs from the Kanji/Kana.
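Since an LLM won't always follow instructions like “remove duplicates” or “keep the order”, it may be worth validating the reply before looking anything up. A minimal sketch, assuming the reply is a JSON object shaped like the example output in the prompt (a `result` list of `Kana`/`Kanji` objects); `parse_vocab_response` is a hypothetical helper name.

```python
import json


def parse_vocab_response(raw: str) -> list[tuple[str, str]]:
    """Turn the model's JSON reply into ordered, de-duplicated (written, kana) pairs."""
    data = json.loads(raw)
    pairs: list[tuple[str, str]] = []
    seen: set[tuple[str, str]] = set()
    for item in data.get("result", []):
        kanji = str(item.get("Kanji", "")).strip()
        kana = str(item.get("Kana", "")).strip()
        if not kanji and not kana:
            continue  # nothing usable in this entry
        # Kana-only words may come back without a "Kanji" value.
        pair = (kanji or kana, kana)
        if pair not in seen:  # keep only the first occurrence, preserving order
            seen.add(pair)
            pairs.append(pair)
    return pairs


reply = ('{"result": [{"Kana": "そら", "Kanji": "空"}, '
         '{"Kana": "ひと", "Kanji": "人"}, {"Kana": "そら", "Kanji": "空"}]}')
print(parse_vocab_response(reply))
# [('空', 'そら'), ('人', 'ひと')]
```

The (written, reading) pairs keep both pieces of information, which is useful when a spelling maps to several dictionary entries.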

A little while ago I noticed someone forked my repository on GitHub, and I believe they switched to GiNZA in this commit of their fork: “feat: freq info in card” (Fluttrr/japanese-vocabulary-extractor@7db3383).

Probably worth taking a look at, but I am too busy to test this out currently.

As for the LLM idea: this sounds great, but speed will likely be a huge issue, especially for big batches. I have no experience using LLM APIs with loads and loads of requests (I assume the input for each request will be quite limited), and I have no clue how running it locally would work… It's totally worth taking a look at, though, and I believe this could totally work if people were willing to wait a while for their decks to be made.
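On the request-count concern, one common approach is to pack many subtitle or text lines into each request instead of sending them one by one. A minimal sketch, assuming a per-request character budget; the `max_chars` values are arbitrary placeholders, not a documented Gemini limit, and the sample lines are made up.

```python
def batch_lines(lines: list[str], max_chars: int = 2000) -> list[str]:
    """Group non-empty lines into newline-joined chunks of at most max_chars each.

    A single line longer than max_chars is kept as its own chunk rather than split.
    """
    batches: list[str] = []
    current: list[str] = []
    size = 0
    for line in lines:
        line = line.strip()
        if not line:
            continue
        extra = len(line) + (1 if current else 0)  # +1 for the joining newline
        if current and size + extra > max_chars:
            batches.append("\n".join(current))
            current, size = [], 0
            extra = len(line)
        current.append(line)
        size += extra
    if current:
        batches.append("\n".join(current))
    return batches


print(batch_lines(["広い空の下", "幾千幾万の人達", "色んな人の願い"], max_chars=13))
# ['広い空の下\n幾千幾万の人達', '色んな人の願い']
```

Fewer, larger requests cut per-request overhead; the trade-off is that a malformed reply affects more lines at once, so the reply should be validated per batch.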

As for the JMDict ID issue: I don’t think this should be an issue at all? It should be as simple as:

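```python
from jamdict import Jamdict

word = "幸せ"
jam = Jamdict()
result = jam.lookup(word)
if result.entries:  # the lookup can return no matches
    entry_id = result.entries[0].idseq  # JMdict entry sequence number
```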

This is done in the dictionary.py file.
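Since the LLM output gives both a written form and a reading, the reading could help pick the right entry where `entries[0]` might be the wrong homograph (e.g. 人 read as ひと vs. にん). A minimal sketch, assuming jamdict's entry objects expose `idseq`, `kanji_forms`, and `kana_forms` (each form with a `.text`), as in jamdict's JMdict model; `pick_idseq` is a hypothetical helper, and the fake entries with made-up IDs only stand in for real lookup results so the example runs without the dictionary installed.

```python
from types import SimpleNamespace


def pick_idseq(entries, written: str, reading: str):
    """Pick the entry ID whose forms match both the written form and the reading.

    Falls back to the first entry with a matching written form, then to the
    first entry overall, and returns None when there are no entries.
    """

    def texts(forms) -> set[str]:
        return {form.text for form in forms}

    # Pass 1: written form (kanji or kana) and reading both match.
    for entry in entries:
        written_forms = texts(entry.kanji_forms) | texts(entry.kana_forms)
        if written in written_forms and reading in texts(entry.kana_forms):
            return entry.idseq
    # Pass 2: only the written form matches.
    for entry in entries:
        if written in texts(entry.kanji_forms) | texts(entry.kana_forms):
            return entry.idseq
    # Pass 3: same behaviour as taking entries[0].
    return entries[0].idseq if entries else None


# With jamdict installed, real entries would come from a lookup, e.g.:
#   from jamdict import Jamdict
#   entries = Jamdict().lookup("人").entries
def fake_entry(idseq, kanji, kana):
    """Mimic only the attributes pick_idseq uses (hypothetical test data)."""
    return SimpleNamespace(
        idseq=idseq,
        kanji_forms=[SimpleNamespace(text=t) for t in kanji],
        kana_forms=[SimpleNamespace(text=t) for t in kana],
    )


entries = [fake_entry(1, ["人"], ["にん"]), fake_entry(2, ["人"], ["ひと"])]
print(pick_idseq(entries, "人", "ひと"))  # 2
```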

Why use an LLM for problems that are already solved faster and cheaper by existing solutions?

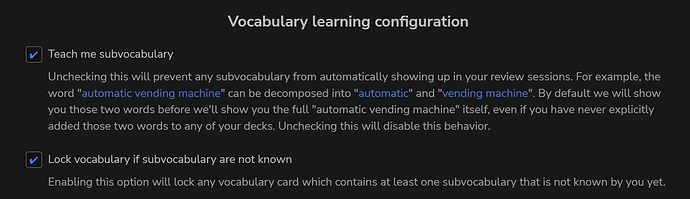

If you can create a big list of words with this tool, you guys can go to jpdb.io and create a new deck from text. This will create a big deck based on the inserted text. The huge advantage is that in jpdb you can sort the words chronologically in their original insertion order, by frequency across jpdb's whole corpus (in my opinion superior), or, if words appear multiple times in the deck, by their local frequency within the deck. Also, jpdb allows you to import existing Anki decks and even your progress, while providing a superior experience (at least compared to base Anki). And you can tell jpdb to break words down into sub-vocabulary before learning them, which makes learning more intuitive imo.

That was me. Yeah, I started fiddling around and quickly realized the tool produces too many false words due to overlapping readings, overly granular splitting, etc. Also, MeCab was not really maintained.

GiNZA produced better results; you can also see I added some filtering by part of speech to exclude grammar items.

However, I don't really read manga at the moment, so I stopped investigating how to improve it further. I also didn't reach a stage where I could make a meaningful pull request back into your tool.

Still, with my adjustments it could go from importing manga to generating an Anki deck, filtering out known words from WaniKani (WK) or an arbitrary list (Bunpro has no public API, so no luck there).

I guess you can get some ideas from that.
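Filtering out known words from an arbitrary list can be as simple as a set lookup. A minimal sketch, assuming the known-word list is a plain UTF-8 text file with one word per line (a hypothetical format; a WaniKani export would need its own conversion first). The helper names are illustrative, not taken from either repository.

```python
from pathlib import Path


def load_known_words(path: Path) -> set[str]:
    """Read one word per line, ignoring blank lines and surrounding whitespace."""
    with path.open(encoding="utf-8") as f:
        return {line.strip() for line in f if line.strip()}


def filter_known(vocab: list[str], known: set[str]) -> list[str]:
    """Drop words that are already known, keeping the order of the rest."""
    return [word for word in vocab if word not in known]


print(filter_known(["広い", "空", "下", "願い"], {"空", "下"}))
# ['広い', '願い']
```

Set membership checks are effectively constant-time, so this step stays cheap even for large decks.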

Bunpro has no public API, but it has a frontend API that both the web app and the mobile apps rely on. I plan to publish public Postman docs for it. You can join us in the “Bunpro API when?” thread.

In general I like the results of the JPDB parser, and how it gives you the longest (?) match. For compound words and expressions that can be broken down further, you can click through from the dictionary entry.

If only there were a way to feed it the furigana available in the source text (EPUB, jpdb-reader, etc.) so it could use that to disambiguate entries.
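As a starting point for that, furigana in HTML-based sources (EPUB chapters are XHTML) is usually marked up with `<ruby>`/`<rt>` tags, so the (base, reading) pairs can be pulled out with Python's standard `html.parser`. A minimal sketch: it handles simple `<ruby>base<rt>reading</rt></ruby>` markup with explicit closing tags, ignores `<rp>` fallback parentheses, and concatenates multiple `<rt>` groups inside one `<ruby>`. Feeding the pairs into JPDB's parser is not something it attempts.

```python
from html.parser import HTMLParser


class RubyExtractor(HTMLParser):
    """Collect (base text, reading) pairs from <ruby> markup."""

    def __init__(self) -> None:
        super().__init__()
        self.pairs: list[tuple[str, str]] = []
        self._in_ruby = False
        self._mode = "base"  # one of "base", "rt", "rp"
        self._base: list[str] = []
        self._reading: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "ruby":
            self._in_ruby = True
            self._mode = "base"
            self._base, self._reading = [], []
        elif self._in_ruby and tag in ("rt", "rp"):
            self._mode = tag

    def handle_endtag(self, tag):
        if tag in ("rt", "rp"):
            self._mode = "base"
        elif tag == "ruby" and self._in_ruby:
            self._in_ruby = False
            base, reading = "".join(self._base), "".join(self._reading)
            if base and reading:
                self.pairs.append((base, reading))

    def handle_data(self, data):
        if not self._in_ruby:
            return
        if self._mode == "base":
            self._base.append(data)
        elif self._mode == "rt":
            self._reading.append(data)
        # Text inside <rp> (fallback parentheses) is ignored.


def extract_furigana(html: str) -> list[tuple[str, str]]:
    parser = RubyExtractor()
    parser.feed(html)
    parser.close()
    return parser.pairs


sample = "<p><ruby>幾千<rp>(</rp><rt>いくせん</rt><rp>)</rp></ruby>の<ruby>人達<rt>ひとたち</rt></ruby></p>"
print(extract_furigana(sample))
# [('幾千', 'いくせん'), ('人達', 'ひとたち')]
```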

Yes! That is exactly what I got too.

It seemed great at first, but after more testing it wasn't that good.

JPDB is pretty good, but I don’t like the fact that it returns things that shouldn’t be studied.

Example: 私立聖祥大附属小学校に通う小学3年生!

JPDB: 私立, 聖, 祥, 大, 附属, 小学校, に, 通う, 小学, 3年, 生

It doesn't make much sense to add “聖祥大” to your reviews; it's just a name.

Overall, I think I've had the best success by splitting the text into a vocab list with JPDB,

then sending the original text and the JPDB vocab list to Gemini to clean it up.

Task: Identify items that should be removed from the vocabulary list.

Use the Japanese text to understand the context of the items in the vocabulary list.

Only remove the following:

* Particles

* Proper nouns, unless they are names of common objects or places.

* Grammar points

* Duplicates

Output:

* Return the list of items to be removed, together with the detailed removal reason.

```json
{
  "item": "聖",
  "reason": "Part of a proper noun (私立聖祥大附属小学校)."
},
{
  "item": "祥",
  "reason": "Part of a proper noun (私立聖祥大附属小学校)."
},
{
  "item": "大",
  "reason": "Part of a proper noun (私立聖祥大附属小学校)."
},
```

Gemini is also able to remove extra items from the JPDB output (I didn't bother to locate the original sentence):

```json
{
  "item": "ちゃう",
  "reason": "Grammar point (casual form of 〜てしまう auxiliary verb)."
},
{
  "item": "ない",
  "reason": "Grammar point (negation suffix/auxiliary adjective)."
},
```
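Applying Gemini's answer back to the JPDB list is then a small post-processing step. A minimal sketch, assuming the full reply is a JSON array of objects with `item` and `reason` keys (the snippets above are fragments, so the array wrapper is an assumption); `apply_removals` is a hypothetical helper. One design choice: removal is by value, so a word flagged only as a duplicate would lose every copy. The sketch therefore de-duplicates locally and ignores entries whose reason starts with “Duplicate”, which itself assumes the model phrases those reasons that way.

```python
import json


def apply_removals(vocab: list[str], reply: str) -> tuple[list[str], dict[str, str]]:
    """Drop flagged items from vocab (order kept) and return the reasons used."""
    removals: dict[str, str] = {}
    for entry in json.loads(reply):
        reason = entry.get("reason", "")
        # Duplicates are handled locally below, so a "duplicate" flag must not
        # delete every copy of the word.
        if reason.lower().startswith("duplicate"):
            continue
        removals[entry["item"]] = reason
    unique = list(dict.fromkeys(vocab))  # order-preserving de-duplication
    kept = [word for word in unique if word not in removals]
    return kept, removals


jpdb_vocab = ["私立", "聖", "祥", "大", "附属", "小学校", "に", "通う", "小学", "3年", "生"]
reply = json.dumps([
    {"item": "聖", "reason": "Part of a proper noun (私立聖祥大附属小学校)."},
    {"item": "祥", "reason": "Part of a proper noun (私立聖祥大附属小学校)."},
    {"item": "大", "reason": "Part of a proper noun (私立聖祥大附属小学校)."},
])
kept, reasons = apply_removals(jpdb_vocab, reply)
print(kept)
# ['私立', '附属', '小学校', 'に', '通う', '小学', '3年', '生']
```

Keeping the reasons makes it easy to review what was dropped before building the deck.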

Perhaps it could even link to Bunpro's grammar points, but that's not a priority for me (and I probably won't be doing it).

Maybe provide a list of Bunpro grammar points and the original text to Gemini, and tell Gemini to return a list of Bunpro grammar points.

But, you need to figure out how to programmatically insert the grammar point into a deck in Bunpro.