JPDB is pretty good, but I don’t like that it returns items that aren’t worth studying.

Example: 私立聖祥大附属小学校に通う小学3年生! (roughly: “A third grader at the private elementary school affiliated with 聖祥大!”)

JPDB: 私立, 聖, 祥, 大, 附属, 小学校, に, 通う, 小学, 3年, 生

It doesn’t make much sense to add 聖, 祥, and 大 to your reviews: 聖祥大 is just a name.

Overall, the approach that has worked best for me is to split the text into a vocabulary list with JPDB, then send the original text and the JPDB vocab list to Gemini to clean up, using this prompt:

Task: Identify items that should be removed from the vocabulary list.

Use the Japanese text to understand the context of the items in the vocabulary list.

Only remove the following:

* Particles

* Proper nouns, unless they are names of common objects or places.

* Grammar points

* Duplicates

Output:

* Return the list of items to be removed, together with the detailed removal reason.
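A minimal sketch of filling in this prompt in Python. The prompt above doesn’t say where the text and the vocab list go, so appending them at the end (with the list as JSON) is my assumption, and `build_prompt` is a made-up helper name. The Gemini call itself is left out; send `prompt` with whichever client you use.

```python
import json

# Prompt wording from above. Appending the text and vocab list at the end,
# and formatting the list as JSON, are assumptions.
PROMPT_TEMPLATE = """Task: Identify items that should be removed from the vocabulary list.

Use the Japanese text to understand the context of the items in the vocabulary list.

Only remove the following:
* Particles
* Proper nouns, unless they are names of common objects or places.
* Grammar points
* Duplicates

Output:
* Return the list of items to be removed, together with the detailed removal reason.

Japanese text:
{text}

Vocabulary list:
{vocab}
"""


def build_prompt(text: str, vocab: list[str]) -> str:
    """Fill the cleanup prompt with the original text and the JPDB vocab list."""
    # Drop exact duplicates locally, keeping first-seen order; this is
    # cheaper and more reliable than asking the model to find them.
    unique_vocab = list(dict.fromkeys(vocab))
    return PROMPT_TEMPLATE.format(
        text=text,
        # ensure_ascii=False keeps the Japanese readable instead of \u escapes
        vocab=json.dumps(unique_vocab, ensure_ascii=False),
    )


text = "私立聖祥大附属小学校に通う小学3年生!"
vocab = ["私立", "聖", "祥", "大", "附属", "小学校", "に", "通う", "小学", "3年", "生"]
prompt = build_prompt(text, vocab)  # send this to Gemini
```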

{

"item": "聖",

"reason": "Part of a proper noun (私立聖祥大附属小学校)."

},

{

"item": "祥",

"reason": "Part of a proper noun (私立聖祥大附属小学校)."

},

{

"item": "大",

"reason": "Part of a proper noun (私立聖祥大附属小学校)."

},

Gemini also catches grammar items that JPDB returns as vocabulary (I didn’t bother to locate the original sentence for these):

{

"item": "ちゃう",

"reason": "Grammar point (casual form of 〜てしまう auxiliary verb)."

},

{

"item": "ない",

"reason": "Grammar point (negation suffix/auxiliary adjective)."

},
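To turn a reply like the ones above back into a cleaned-up vocabulary list, something like the following should work. It’s a sketch that assumes the reply is JSON in the shape shown here, possibly wrapped in a Markdown code fence or, as above, a bare run of objects with a trailing comma instead of a proper array. `parse_removals` and `apply_removals` are made-up helper names.

```python
import json
import re


def parse_removals(reply: str) -> list[dict]:
    """Parse Gemini's removal list into a list of {"item", "reason"} dicts.

    Assumes the reply is JSON, possibly wrapped in a Markdown code fence,
    and possibly a bare run of objects with a trailing comma.
    """
    body = reply.strip()
    # Remove a surrounding code fence (optionally tagged "json") if present.
    fenced = re.match(r"^```(?:json)?\s*(.*?)\s*```$", body, re.DOTALL)
    if fenced:
        body = fenced.group(1)
    # Wrap "{...}, {...}," in brackets to make it a valid JSON array.
    if not body.startswith("["):
        body = "[" + body.rstrip(",") + "]"
    return json.loads(body)


def apply_removals(vocab: list[str], removals: list[dict]) -> list[str]:
    """Return vocab without the flagged items, keeping the original order.

    Every occurrence of a flagged item is dropped.
    """
    flagged = {entry["item"] for entry in removals}
    return [word for word in vocab if word not in flagged]
```

Because `apply_removals` drops every occurrence of a flagged item, a word that Gemini flags only as a duplicate disappears entirely; deduplicating the list before sending it avoids that case.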

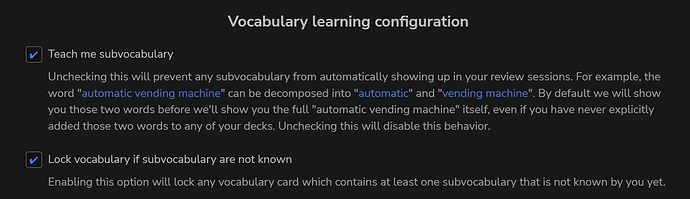

Perhaps this could even link to Bunpro’s grammar points, but that’s not a priority for me (and I probably won’t do it).

One way would be to give Gemini the list of Bunpro grammar points together with the original text, and ask it to return the Bunpro grammar points that appear in the text.
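A rough sketch of that idea, assuming you already have the Bunpro grammar point names as a plain list of strings (how to get that list isn’t covered here, and the names in the test are placeholders, not Bunpro’s actual titles). `build_grammar_prompt` and `validate_grammar_points` are hypothetical helpers; checking the reply against the supplied list guards against the model returning a point that isn’t in it or rewording a name.

```python
import json


def build_grammar_prompt(text: str, grammar_points: list[str]) -> str:
    """Ask Gemini which of the supplied grammar points are used in the text."""
    return (
        "Task: From the list of grammar points below, return the ones that are "
        "used in the Japanese text. Use the names exactly as they appear in the "
        "list. Return a JSON array of strings.\n\n"
        f"Japanese text:\n{text}\n\n"
        f"Grammar points:\n{json.dumps(grammar_points, ensure_ascii=False)}\n"
    )


def validate_grammar_points(reply: str, grammar_points: list[str]) -> list[str]:
    """Keep only names from the supplied list, in reply order, without repeats."""
    known = set(grammar_points)
    result: list[str] = []
    for name in json.loads(reply):
        # Skip anything the model invented or reworded, and repeated names.
        if name in known and name not in result:
            result.append(name)
    return result
```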

However, you would still need to figure out how to add those grammar points to a Bunpro deck programmatically.