Hi everyone. I was wondering how accurate the JLPT practice tests are compared to the actual test. Do the scores line up pretty well? How close are the practice tests in terms of difficulty, pacing, and timing? I’m really concerned about the listening section. I feel like the listening part flies by when I’m practicing, and I’m kinda worried the real test will be the same. Basically, I just want to know if my score on the practice tests is a good reflection of how I’ll do on the actual test. Thanks a lot for the help!

Haven’t tried the listening or reading sections of the tests yet, but from personal experience the vocab and grammar are really close to the real tests. The only things missing are test pressure and the annoying cumulative three or four minutes you lose manually filling in the test sheet. Also, the actual JLPT scoring algorithm is super weird, so aim to get 70-75% of the questions on the practice test correct. 60% probably wouldn’t be enough.

There is no way to line up the scores of the practice tests with the real tests unfortunately as the actual tests are mysteriously weighted. No one knows how the weighting is done, afaik. Therefore you should aim for a decently high pass rate on any practice test, as Beghaus said.

In terms of pacing and timing, I think they are very good. In terms of difficulty, I am not super sure. I passed an N2 practice test with 75 percent, which I think is a bit above my actual ability, but I could have also gotten lucky with the contents of that particular test.

This is all really good advice. I’ll shoot for 70% and go from there. I appreciate all the help and perspectives!

To add to @cafelatte’s point, the Japan Foundation’s information page on this states that:

Calculation of test scores not affected by the difficulty of exams (scaled scores) is based on a statistical test theory called Item Response Theory (IRT). This is completely different from calculation of raw test scores based on the number of correct answers.

Scaled scores are determined mathematically based on “answering patterns” of how examinees answer particular questions (correctly or incorrectly). For example, a test consisting of 10 questions (items) has a maximum of 1024 answering patterns (2^10 patterns).

And

[…] scaled scores may not be identical even though the number of correctly answered questions is the same when answering patterns are different.

There’s a bunch of studies attached to the page I linked, but iirc none of them actually indicate (in a satisfying manner) how the Japan Foundation maps the ‘answering patterns’ to their scoring system.

So while I’ve often seen folks posit that ‘harder’ questions might be weighted more (ie give you more points), my reading of it is that it is completely possible that ‘easier’ questions might actually give you more points, depending on how they score the answering patterns.
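To make the ‘answering patterns’ idea a bit more concrete, here is a minimal sketch of one common IRT model (the two-parameter logistic, or 2PL). To be clear, this is only an illustration: the JLPT does not publish which model or item parameters it uses, so the `ITEMS` values and the function names below are all made up. It just shows how two people with the same number of correct answers can end up with different ability estimates.

```python
import math

# Hypothetical item parameters as (discrimination a, difficulty b).
# These are invented for illustration and are NOT real JLPT values.
ITEMS = [(0.5, -1.0), (0.8, -0.5), (1.0, 0.0), (1.5, 0.5), (2.0, 1.0)]


def p_correct(theta, a, b):
    """2PL probability that an examinee with ability theta answers correctly."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def log_likelihood(theta, pattern):
    """Log-likelihood of a 0/1 answering pattern at a given ability theta."""
    total = 0.0
    for (a, b), answered_correctly in zip(ITEMS, pattern):
        p = p_correct(theta, a, b)
        total += math.log(p if answered_correctly else 1.0 - p)
    return total


def estimate_theta(pattern):
    """Maximum-likelihood ability estimate via grid search over [-4, 4]."""
    grid = [i / 100.0 for i in range(-400, 401)]
    return max(grid, key=lambda t: log_likelihood(t, pattern))


# Two answering patterns with the same raw score (3 out of 5).
pattern_a = [1, 1, 1, 0, 0]
pattern_b = [0, 0, 1, 1, 1]

theta_a = estimate_theta(pattern_a)
theta_b = estimate_theta(pattern_b)

print("possible patterns:", 2 ** len(ITEMS))
print("raw scores:", sum(pattern_a), sum(pattern_b))
print("ability estimates:", theta_a, theta_b)
```

In this particular model, what separates the two estimates is how discriminating the correctly answered items are, not simply how hard they are, which fits the point that ‘harder’ does not automatically mean ‘worth more’. Whether the real JLPT behaves like this is unknown.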

The TLDR is to not get too attached to how you score (whether high/low) on the practice tests, because the JLPT scoring system is much too opaque for the practice tests to reflect. This also applies to the actual test itself - try not to freak out if you feel that you are getting a lot of them wrong, because (within reason) it’s your answer pattern that matters, not the number of questions you get right/wrong. (Off the top of my head, there are also questions included in the actual tests that are used for calibration etc, and not included in your actual score).

As an aside, if you are interested in seeing (purported) past JLPT papers, there are some Google Doc links floating around on reddit.

As an experiment, I just did the first practice N1 test and got about 70 percent for the vocab and grammar, but I got a perfect 100% on the whole reading section, so I ended up with 85% overall on that particular test. It took 1 hour 10 minutes, so I had 40 minutes remaining.

For reference, in the real JLPT N1 in July 2025, I got something like 30/60 for the vocab/grammar and 35/60 for the reading (65/120 combined), and I remember that I ran out of time pretty dramatically. So my raw score was 54% and I had no time to spare, whereas I had 40 minutes left on this practice test. So clearly there is a big difference. I don’t think my skills have improved that much in a few months, which suggests to me that while the Bunpro tests are not bad, they are probably on the easy side (to the extent of being a bit redundant if I’m getting all answers correct on the reading).

So, I would say the reading portion was way too easy on that particular test and I definitely noticed that while doing it. The vocab and grammar seemed fairly close to actual difficulty. I will try the other N1 tests at a later date and see how I do.

Edit - In particular, I felt the answers were far too obvious while the actual text seemed a lot closer to N2 than what I have experienced in N1.

In particular, I felt the answers were far too obvious while the actual text seemed a lot closer to N2 than what I have experienced in N1.

Yeah, I gave them the same feedback in the announcement thread. The actual test has multiple answers that are kinda right and you have to pick the one that’s the most right, but on the one I took on here, I could often just read the first few words of each answer and know which one had anything to do with what the passage was about. And yeah, the vocabulary and grammar were much easier than the past tests/other practice tests I’ve done. It’s been years since I took N2 but that’s about the difficulty it felt like to me as well.

I took all JLPT N2 mock tests. I feel the same; I think the reading sections were slightly on the easier side, and the vocab/grammar and listening sections were more challenging.

They did say that the first batch of tests are on the easier side. From the announcement page: Bunpro JLPT Tests! New Feature, Sep 20th 2025

We believe this first batch of five tests generally vary from slightly on the easy side, to level accurate. In the future when we release another batch, we will aim to make them slightly on the harder side and put an indicator on each test showing their relative difficulty so that users can get a better gauge of their ability.

Still, I think it’s a great feature!

Agreed RE: the reading easiness - I failed N2 with the lowest score (20) in reading in July, and just dropped 2 questions over the whole reading section of one of the N1 tests. I had previously done one N2 test and the readings felt way too easy on that so I moved up 1.

I’d say the text difficulty is probably N2ish (and even on the easier side compared to some readings in SKM and past papers) - but the correct answer option is far too obvious in the questions compared to the real thing.

I often find on the real tests there are two options that can seem right, and you really need to drill down and pick each sentence apart to figure out which is right, and which is a little off.

I’d say that the vocab and especially the grammar were pretty difficult for me, which is unsurprising as I haven’t actually studied most N1 material!

Nevertheless - if we park the level misalignment, these tests are still a great resource for practicing the format. The principle of looking over your mistakes and learning / revisiting what may have caused them holds true whatever the level is!

This is all really great information, for real! Have any of you used a practice test outside of Bunpro? Like are there any particular sources that you would recommend?

Official workbooks (which are essentially practice tests) are available for sale. They’re not crazy expensive. I have used them before.

I am pretty sure most other websites that provide tests are illegally using past tests, tbh. And the JLPT Ts and Cs explicitly say your results will be nullified and you will be banned from taking the JLPT if you use those materials. Now whether that’s a statement with any teeth to it is a different matter… but worth being aware of.

One way it’s not like a real test -

I don’t think I’ve seen a single fake kanji in the practice tests. Kanji like 時 but instead of 日寺 it’s 虫寺. Basically kanji that don’t exist show up sometimes on practice tests outside of Bunpro.

I think it’s one of those things that’s like impossible to prove, but if someone is somehow dumb enough to be flaunting a past test to the point that they become aware of it, then like yeah.

Because don’t most practice tests that get sold say something along the lines of like “questions reflect prior questions as seen on the JLPT” or something? As in, some of the test questions are directly taken from prior tests? I thought I had seen something like that on my Japan Times JLPT books.

I’m not sure, I’m just thinking of the official workbooks sold by the Japan Foundation for around 800 yen

(these ones: Japanese-Language Proficiency Test Official Practice Workbook | JLPT Japanese-Language Proficiency Test)

I didn’t know Japan Times did workbooks as well.

But also I think “reflect” means more like “embody” as in “these are in the spirit of the past jlpt questions as much as possible.” So they’re not directly taken from the tests.

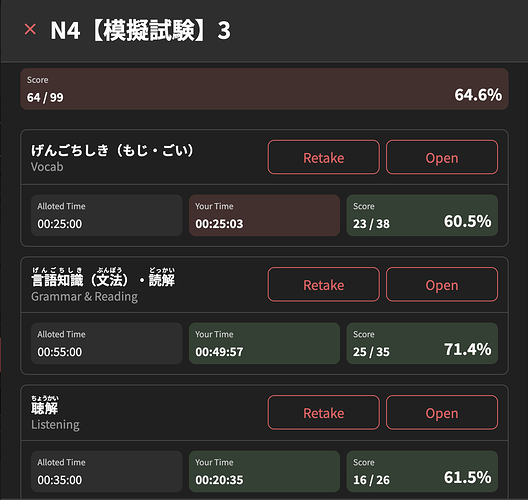

This is my practice test result. A big thing I learned is to not second guess myself. Go with your first answer and stick with it. I did the listening at a faster pace than the actual test. I didn’t pause at all. Filling in the blanks with random vocabulary words was tough, but I was pleased with how well I did on some problem sets. Overall, I think this test score reflects how I felt about the whole thing: I wasn’t stellar but I also wasn’t a deer in the headlights.