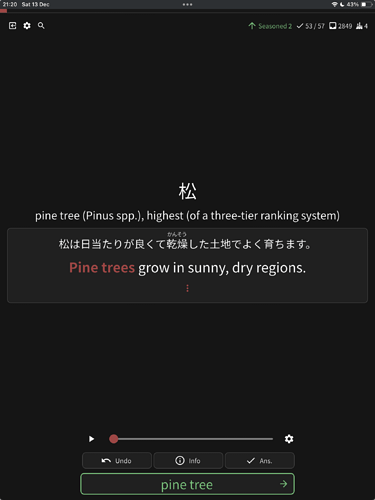

Something weird is going on with cram right now. I can’t figure out exactly what’s happening, but if I set up a cram with a single vocab item and turn on “complete mode”, I get tested only three times, not twelve. If I set up a cram with three vocab items and turn on “complete mode”, each item gets tested four times, not twelve. Grammar items seem to work normally, both in a cram with only grammar items and in a cram with a mix of grammar and vocab items. In every case, it’s the vocab items that don’t get tested the expected number of times.

[Edit] I just realised cram gives me exactly the three sentences that I’m allowed to manually create ghost reviews for. There might be a connection there.

This never happened before.
