All rabbit words will provide equal experience (regardless of “rarity”).

Basically, the source of study and rarity make no difference to the amount of XP received~

Great, so that provides yet another incentive to learn about as many types of rabbits as possible!

I will study hard for the 2025 Japanese Bunny Proficiency Test (日本語バニー力試験).

For some of the topical decks (e.g. a particular song, engineering terms, etc.) I really like that I can skim through and pick out the vocab items relevant to the JLPT level I’m studying for.

And there are certainly ones I’ve stumbled upon and thought “wow! That’s really good!”. It feels empowering to add stuff I want to learn into my queue like that.

@semanticman @haldo @casual @Flutter @DeclanF @craze1x @Magyarapointe @JamesBunpro @simias @eefara @lantana

Just mass-tagging anyone that might be vaguely interested, but:

The Import feature has been (silently) released!

Will likely have a post up about this soon, but until then, would love for people to try to break it and share first impressions, etc.!

FYI, next on the list is adding “Add to Deck” buttons in places like:

- The Search page

- The Grammar/Vocab pages themselves

- Anywhere else you think might be a good place

Importing the Non Non Biyori deck I posted earlier (using both JMDict IDs and regular words) gave me the error “Something went wrong when finding vocab matches!”, and there’s no option to skip anything; I’m just stuck. I think when these errors happen, it should just skip the offending words, because it’s impossible to tell which word is causing the error. Even if you gave the choice to resolve the conflicts, this sort of behavior makes importing decks incredibly tedious.

I also don’t like the import limits. The Aria Manga deck I posted has almost 8000 entries, and the first volume alone contains almost 1000 words, which would exceed the unit limit. I can see why you might specifically want manga to have one deck per volume, with units for each chapter, but generating these automatically is honestly quite a pain. Importing decks for longer novels would also be made much harder by this, and my Non Non Biyori deck also hits the 2000-word limit.

Hi again! Thanks for testing the feature.

I’m guessing this might actually be a timeout issue (especially if the request errors after exactly 30 seconds).

Can you DM me the list of words? Or a link to the list of words?

I’ll take a look into this for you.

- Non Non Biyori S1 with JMDict IDs: Vocabulary list for Non Non Biyori Season 1 using JMDict IDs · GitHub

- Non Non Biyori S1 with regular words: Vocabulary List for Non Non Biyori S1 · GitHub

- Aria/Aqua Manga with JMDict IDs: Vocabulary list for Aqua+Aria using JMDict IDs · GitHub

- Aria/Aqua Manga with regular words: Vocabulary list for Aria/Aqua · GitHub

Here, I posted them a bit higher up. And yeah, it could very well be a timeout; it did take approximately 30 seconds. Even importing only the vocab for Episode 1 of Non Non Biyori caused the same error.

I ran into the same error while importing a 4500-word vocab list in batches. At first, I did small random batches (small: under 500), and whenever I ran into the error, I just closed the import window and opened it again; the list was still there, so I ran the import again, and it worked.

But then Bunpro ate my whole deck, and I was bored with manual copy-pasting, so now I’ve split the original file into 3 files of at most 2000 lines… and what I did earlier doesn’t work anymore.

I’m in the process of trying again with smaller batches.

Also, I first tried with words instead of JMDict IDs, and Bunpro couldn’t find 12 of the first 134. Some weren’t “real” words (@Flutter さん, your wonderful jpextractor program extracted られる on its own, for example, and Bunpro couldn’t parse that in a word list), but others were just alternate, old-style spellings (其の, 取り敢えず).

When using JMDict IDs instead of words, with the same vocabulary list, I had a single unmatched word, which was found on a second attempt.

Looking into this now.

Thought it might be the search building up its cache, but now I think it’s specific vocab results that are breaking it.

Will do more investigation.

EDIT: Actually I think it might still be the cache building itself.

Hol up cowboys

EDIT: Bunpro didn’t “eat” my deck; I realised I forgot to save each unit, one after the other… I wish there were a general “Save All” button… A big one, in red, at the bottom of the page!

Trying right now in 500-ID batches: the first one didn’t go through, but re-running the import once solved it; the second batch didn’t go through either, and I had to re-run the import twice to get it to work; the third batch… refuses to go through, even after multiple runs.

I will try now with 250-item batches…

btw: I actually don’t know much about the command line, or at least not enough… Does someone know how I could automate writing the output of jpvocabularyextractor to several files? Right now, I’m using

`sed -n '2,500p' vocab.csv > file1.txt`

then manually change to

`sed -n '501,1000p' vocab.csv > file2.txt`

etc., etc… but with big files, it gets old fast. Any tips?
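One way to automate those hand-written `sed` ranges is a small shell function that lets `awk` do the line counting. This is a minimal sketch, not something posted in the thread: the function name `split_vocab` is hypothetical, and it assumes the extractor’s output has already been saved to a file (like `vocab.csv` above) whose first line is a header, which is what starting the `sed` range at line 2 suggests.

```shell
# Hypothetical helper: split_vocab FILE SIZE PREFIX
# Assumes line 1 of FILE is a header and writes the remaining lines
# into PREFIX1.txt, PREFIX2.txt, ... with at most SIZE lines per file.
split_vocab() {
  awk -v size="$2" -v prefix="$3" '
    NR == 1 { next }                 # skip the header line
    {
      n = int((NR - 2) / size) + 1   # 1-based batch number for this line
      out = prefix n ".txt"
      if (out != prev) {             # moving on to a new batch file
        if (prev != "") close(prev)  # close the previous file handle
        prev = out
      }
      print > out
    }
  ' "$1"
}
```

For example, `split_vocab vocab.csv 250 file` writes file1.txt, file2.txt, … with 250 lines each, the last one holding the remainder. Unlike the manual ranges above, the first file also gets the full batch size instead of one line fewer. If the file has no header, drop the `NR == 1 { next }` line and change `NR - 2` to `NR - 1`. The standard `split` utility can do much the same thing, e.g. `tail -n +2 vocab.csv | split -l 250 - file`, but it names its output fileaa, fileab, … rather than numbering it.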

Definitely the cache thing.

@Flutter Tried a few times and, now that it’s all cached, ‘Non Non Biyori S1 with JMDict IDs’ finishes in 3.7s.

Gonna see if I can make more performance improvements.

(Gonna have to before officially releasing anyway).

Noted!

I’ve just tried with 250-item batches: all of them needed to be re-run, but only once, and then they went through.

Thanks!

Also, I’ve tried mixing and matching: importing a 250-item ID list, then a 250-word list, then an ID list again. The JMDict ID lists work much better: the word lists always had around 15 unrecognised words per 250-item batch, while the ID lists had none.

Importing Episodes 1-9 worked instantly, but trying to import 10-12 only worked after 4 tries. I’m wondering, though: what happens to vocab it doesn’t recognize? Is it skipped? My program definitely spits out some stuff not commonly found in most dictionaries, but it’s all verified by JMDict, so is the error message just misleading?

but trying to import 10-12 only worked after 4 tries

Probably just needed more attempts (time for the cache to build).

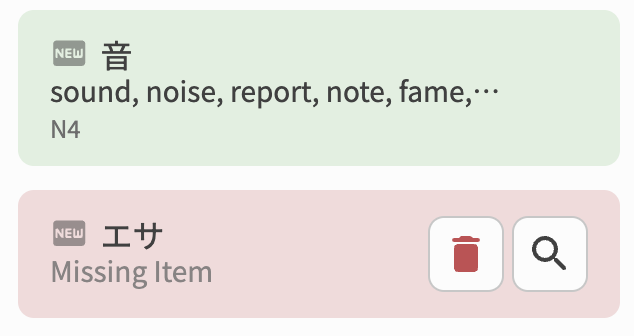

If it can’t find something, basically you can either remove the items or manually search/replace.

So like:

We’re working on the indexing/caching stuff now.

I’ll report back when it’s in a more ready state for your purposes (very large volumes of content, 5000+ items).

Thanks! Also, is there any chance of lifting the 500-word unit limit? Otherwise I have no clue how I’d import manga other than painstakingly separating chapters or fragmenting volumes into 500-word chunks.

I just did this for all seasons of Non Non Biyori; if you do it episode by episode, it’s actually okay. Some of the episodes got very close to the 500-word unit limit, though, so I think even for anime this could be a problem. I submitted them all for review to be published. Thank you so much for this amazing feature!

Well, I was going to suggest using this to import some anime vocab lists and start an anime-watching club, but it looks like Flutter has already done that.